We present DreamBeast, a novel method based on score distillation sampling (SDS) for generating fantastical 3D animal assets composed of distinct parts.

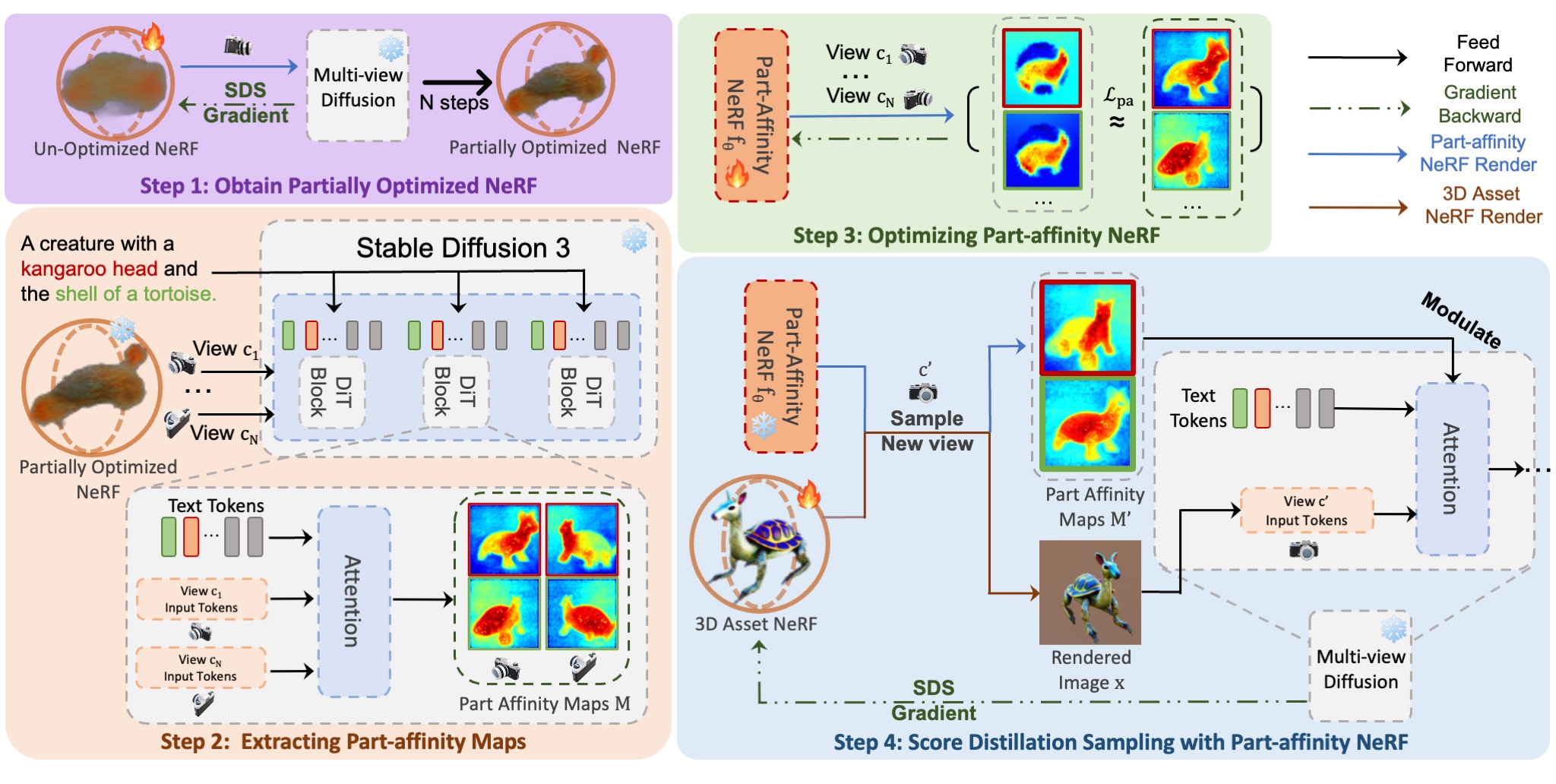

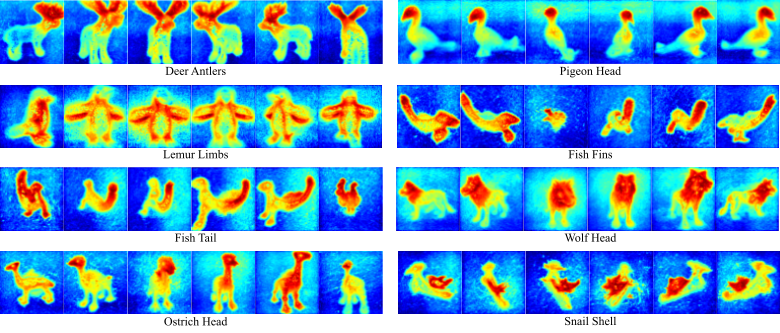

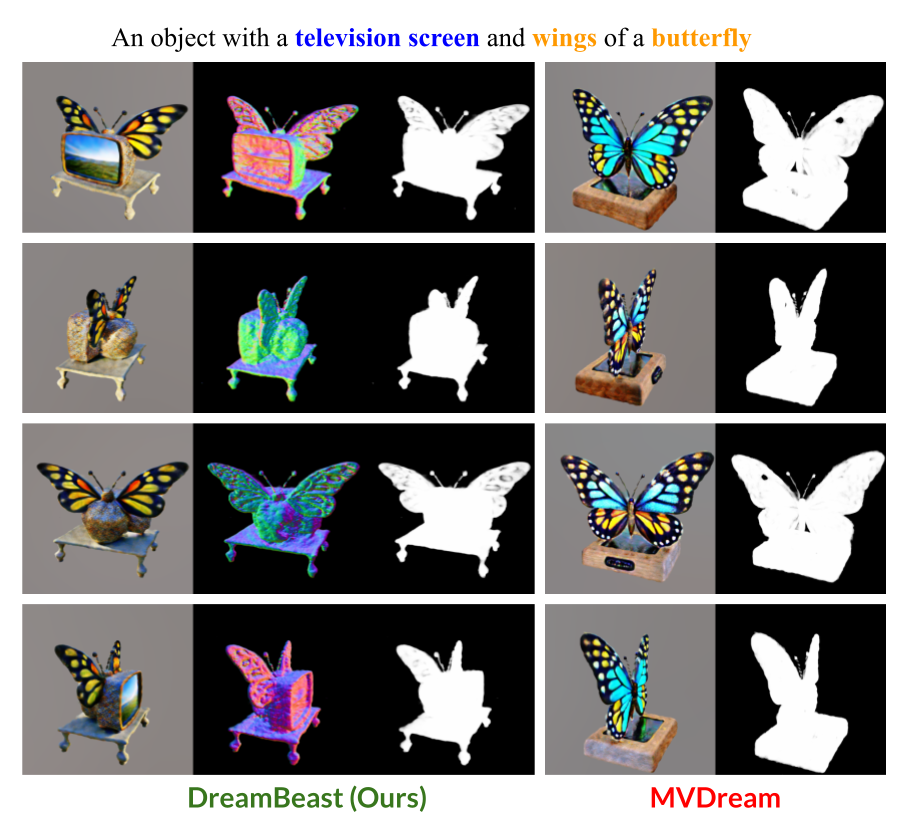

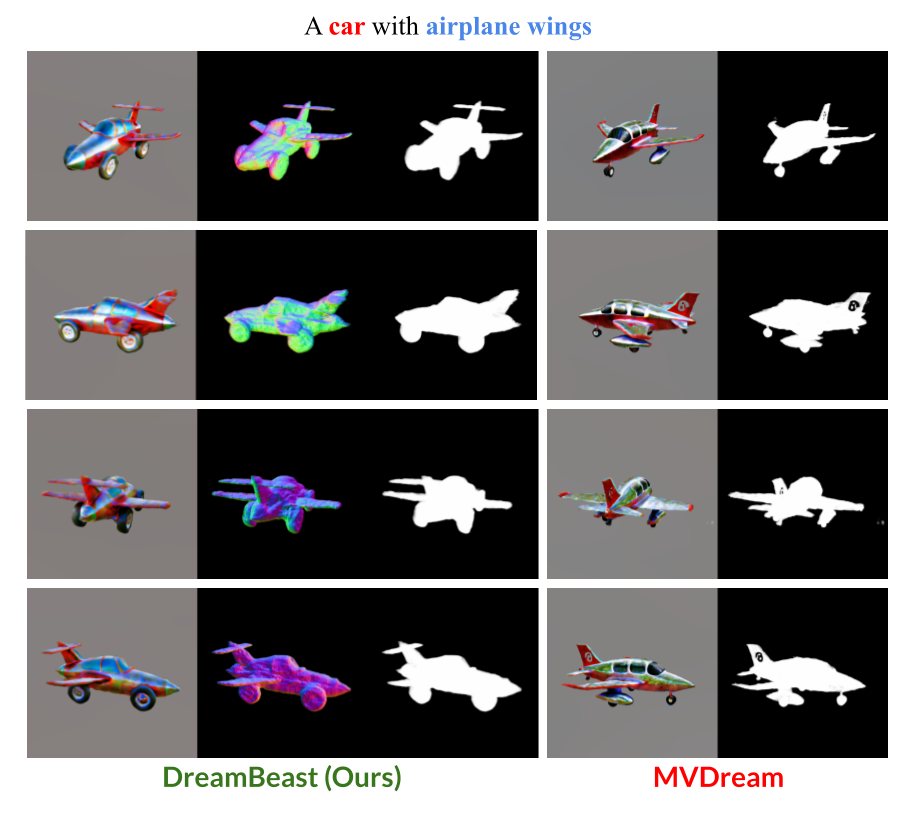

Existing SDS methods often struggle with this generation task because text-to-image diffusion models have a limited understanding of part-level semantics. While recent diffusion models, such as Stable Diffusion 3, demonstrate a better part-level understanding, they are prohibitively slow and exhibit other common problems associated with single-view diffusion models. DreamBeast overcomes these limitations through a novel part-aware knowledge transfer mechanism. For each generated asset, we efficiently extract part-level knowledge from the Stable Diffusion 3 model into a 3D part-affinity implicit representation. This enables us to instantly generate part-affinity maps from arbitrary camera views, which we then use to modulate the guidance of a multi-view diffusion model during SDS to generate 3D assets of fantastical animals.
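The modulation step described above can be illustrated with a small NumPy sketch. It is not the DreamBeast implementation: it assumes that "modulating the guidance" means reweighting the cross-attention of each part's text token by that part's rendered affinity map, and the exponential reweighting, the `strength` parameter, and the `part_token_ids` mapping are all hypothetical choices made for illustration. The `sds_gradient` helper shows only the standard SDS form, a weighted difference between predicted and injected noise.

```python
import numpy as np


def modulate_attention(attn, affinity, part_token_ids, strength=1.0):
    """Reweight cross-attention toward part-affinity maps (illustrative only).

    attn:           (pixels, tokens) attention weights, each row sums to 1
    affinity:       (parts, pixels) affinity values in [0, 1] for one camera view
    part_token_ids: token index associated with each part (hypothetical mapping)
    strength:       how strongly affinity overrides the original attention (assumed)
    """
    out = attn.astype(float).copy()
    for part, tok in enumerate(part_token_ids):
        # Boost the part's token where its affinity is high, suppress it elsewhere.
        out[:, tok] *= np.exp(strength * (2.0 * affinity[part] - 1.0))
    # Renormalise so each pixel's attention is again a distribution over tokens.
    return out / out.sum(axis=1, keepdims=True)


def sds_gradient(eps_pred, eps, weight):
    """Standard SDS image-space gradient: w(t) * (eps_pred - eps)."""
    return weight * (eps_pred - eps)


# Toy example: 4 pixels, 3 tokens; parts 0 and 1 map to tokens 1 and 2.
attn = np.full((4, 3), 1.0 / 3.0)
affinity = np.array([[1.0, 1.0, 0.0, 0.0],
                     [0.0, 0.0, 1.0, 1.0]])
modulated = modulate_attention(attn, affinity, part_token_ids=[1, 2])
```

In this toy setup, the first two pixels end up attending more to token 1 and the last two to token 2, which is the qualitative behaviour a part-affinity map is meant to enforce for each camera view.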

DreamBeast significantly enhances the quality of generated 3D creatures with user-specified part compositions while reducing computational overhead, as demonstrated by extensive quantitative and qualitative evaluations.

@article{li2024dreambeast,
  title={DreamBeast: Distilling 3D Fantastical Animals with Part-Aware Knowledge Transfer},
  author={Li, Runjia and Han, Junlin and Melas-Kyriazi, Luke and Sun, Chunyi and An, Zhaochong and Gui, Zhongrui and Sun, Shuyang and Torr, Philip and Jakab, Tomas},
  journal={arXiv preprint arXiv:2409.08271},
  year={2024}
}